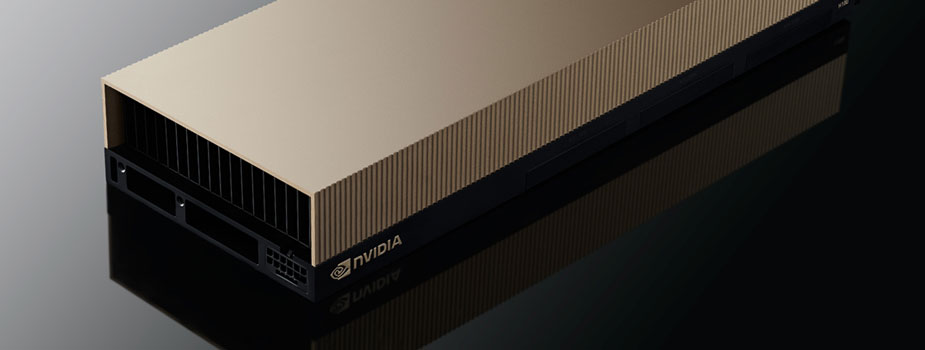

NVIDIA H100, the Most Powerful GPU for AI and HPC, Is Here

The NVIDIA® H100 Tensor Core GPU, built on the NVIDIA Hopper architecture, delivers the next major leap in NVIDIA's accelerated computing data center platform. It securely accelerates diverse workloads in every data center, from small enterprise jobs to exascale HPC and trillion-parameter AI, enabling researchers and innovators to pursue their life's work faster than ever before.

The Silicon Mechanics Difference

Silicon Mechanics offers a wide range of systems that support the NVIDIA H100 in different form factors, GPU densities, and storage capacities. Our system design experts have hand-selected systems from multiple manufacturers that we believe best meet the breadth and depth of our clients' needs. Each system is highly configurable with components from industry-leading technology providers.

View systems that support NVIDIA H100

The list of systems that support the NVIDIA H100 is constantly growing, so check back regularly to see which systems are available today.

Learn More about the NVIDIA H100

H100 brings massive amounts of compute to the data center. To keep that compute fully utilized, H100 is the world's first GPU with HBM3 memory, delivering a class-leading 3 terabytes per second (TB/s) of memory bandwidth. H100 is also the first GPU to support PCIe Gen5, providing up to 128GB/s of bidirectional bandwidth. This fast interconnect enables optimal connectivity with the highest-performing CPUs, as well as with NVIDIA ConnectX-7 SmartNICs and BlueField-3 DPUs, which allow up to 400Gb/s Ethernet or NDR 400Gb/s InfiniBand networking acceleration for secure HPC and AI workloads.

The most powerful GPU for AI and HPC workflows

As workloads grow in complexity, multiple GPUs must work together with extremely fast communication between them. NVIDIA H100 uses the new PCIe Gen5 interconnect to improve GPU-to-GPU and GPU-to-CPU bandwidth. When connected with NVLink or NVSwitch, multiple H100 GPUs enable the creation of the world's most powerful scale-up servers.

NVIDIA H100 GPUs are available as a server building block in the form of integrated baseboards in four- or eight-GPU configurations. Leveraging the power of H100 multi-precision Tensor Cores, an 8-way HGX H100 provides up to 32 petaFLOPS of FP8 deep learning compute performance. This performance density is critical to powering today's most demanding HPC and AI workloads.

Key Features:

- H100 is the first GPU to support PCIe Gen5, providing up to 128GB/s of bidirectional bandwidth

- H100 is the world's first GPU with HBM3 memory, providing 3TB/s of memory bandwidth

- An 8-GPU H100 system provides up to 32 petaFLOPS of FP8 deep learning compute performance

About Silicon Mechanics

Silicon Mechanics, Inc. is one of the world's largest private providers of high-performance computing (HPC), artificial intelligence (AI), and enterprise storage solutions. Since 2001, Silicon Mechanics' clients have relied on its custom-tailored open-source systems and professional services expertise to overcome the world's most complex computing challenges. With thousands of clients across the aerospace and defense, education/research, financial services, government, life sciences/healthcare, and oil and gas sectors, Silicon Mechanics solutions always come with "Expert Included"℠.

Expert Included

Our engineers are not only experts in traditional HPC and AI technologies; they also routinely build complex rack-scale solutions with today's newest innovations, so we can design and build the best solution for your unique needs.

Talk to an engineer and see how we can help solve your computing challenges today.