NVIDIA GB200 NVL72

Scalable AI Infrastructure for the Exascale Era

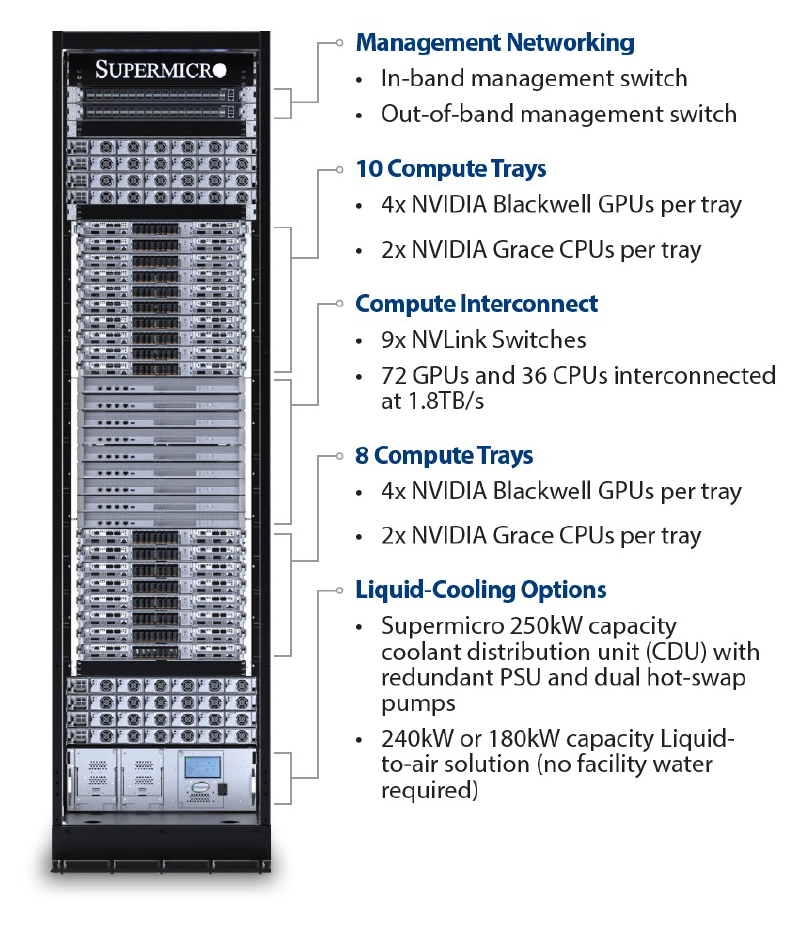

The NVIDIA GB200 NVL72 is a rack-scale platform purpose-built for high-performance AI infrastructure. With 36 Grace CPUs and 72 Blackwell GPUs connected by high-speed NVLink, this unified system delivers breakthrough performance for multi-trillion-parameter model training, real-time inference, and advanced HPC workloads.

Full-Lifecycle Support from Silicon Mechanics

When you purchase the NVL72 through Silicon Mechanics, our engineering team works with you to determine the right system configuration for your workload, power, and cooling requirements. We help ensure a smooth deployment and provide ongoing, U.S.-based technical support to help you maintain system performance and adapt to future needs.

NVL72 Highlights

Core Architecture

- Unified Compute Fabric:

72 Blackwell B200 GPUs and 36 NVIDIA Grace CPUs interconnected via 5th-gen NVLink, delivering up to 1.8 TB/s of NVLink bandwidth per GPU so that every GPU in the rack can communicate directly with every other GPU.

- GB200 Grace™ Blackwell Superchip Design:

Each superchip integrates two Blackwell GPUs and one Grace CPU using NVLink-C2C, enabling coherent memory sharing and ultra-low-latency communication between CPU and GPUs.

- Acts as a Single GPU:

NVL72 is designed to function as one massive GPU, offering up to 30× faster inference for trillion-parameter LLMs compared to previous-generation systems.
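The rack composition above can be checked with simple arithmetic. The minimal sketch below assumes only the figures stated on this page (36 superchips, each with one Grace CPU and two Blackwell GPUs, and 1.8 TB/s of NVLink bandwidth per GPU). The constant and function names are hypothetical, chosen for illustration, and are not part of any NVIDIA tool or API.

```python
# Hypothetical constants taken from the figures stated above (illustration only).
SUPERCHIPS_PER_RACK = 36   # one Grace CPU per superchip -> 36 superchips
GPUS_PER_SUPERCHIP = 2     # two Blackwell GPUs per GB200 superchip
CPUS_PER_SUPERCHIP = 1     # one Grace CPU per GB200 superchip
NVLINK_TBPS_PER_GPU = 1.8  # 5th-gen NVLink bandwidth per GPU, in TB/s


def rack_summary(superchips: int = SUPERCHIPS_PER_RACK) -> dict:
    """Derive GPU/CPU counts and aggregate NVLink bandwidth for one rack."""
    gpus = superchips * GPUS_PER_SUPERCHIP
    cpus = superchips * CPUS_PER_SUPERCHIP
    # Aggregate bandwidth = per-GPU NVLink bandwidth summed over all GPUs.
    aggregate_tbps = gpus * NVLINK_TBPS_PER_GPU
    return {"gpus": gpus, "cpus": cpus, "aggregate_nvlink_tbps": aggregate_tbps}


summary = rack_summary()
print(summary)
```

Multiplying out gives 72 GPUs, 36 CPUs, and roughly 130 TB/s of aggregate NVLink bandwidth across the rack, which is why the 1.8 TB/s figure is best read as a per-GPU number rather than a system total.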

Cooling & Efficiency

- Direct-to-Chip Liquid Cooling (DLC):

Each rack includes a coolant distribution unit (CDU) rated for up to 250 kW, with redundant PSUs and hot-swappable pumps, removing heat at the source to avoid thermal bottlenecks and prevent performance throttling.

- Energy Savings:

The DLC architecture reduces electricity costs by up to 40% compared with traditional air-cooled systems.

- Flexible Deployment:

For facilities without a chilled-water supply, optional liquid-to-air cooling solutions allow the NVL72 to be deployed without facility water.
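To put the "up to 40%" figure in concrete terms, the sketch below estimates an annual electricity bill for a constant average power draw and applies the stated maximum reduction. The 150 kW load and $0.10/kWh price are hypothetical inputs chosen for illustration, not measured NVL72 figures, and the function names are likewise illustrative.

```python
HOURS_PER_YEAR = 24 * 365


def annual_energy_cost(avg_power_kw: float, price_per_kwh: float) -> float:
    """Annual electricity cost for a constant average power draw."""
    return avg_power_kw * HOURS_PER_YEAR * price_per_kwh


def max_dlc_savings(air_cooled_cost: float, reduction: float = 0.40) -> float:
    """Upper bound on yearly savings if DLC cuts the bill by `reduction`."""
    if not 0.0 <= reduction <= 1.0:
        raise ValueError("reduction must be a fraction between 0 and 1")
    return air_cooled_cost * reduction


# Hypothetical air-cooled baseline: 150 kW average draw at $0.10/kWh.
baseline = annual_energy_cost(avg_power_kw=150.0, price_per_kwh=0.10)
savings = max_dlc_savings(baseline)
print(f"baseline ${baseline:,.0f}/yr, savings up to ${savings:,.0f}/yr")
```

Because 40% is an upper bound, actual savings depend on your facility's load profile, cooling design, and energy pricing; the function simply makes that dependency explicit.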

Workload Optimization

- Large-Scale Model Training (e.g., LLMs, foundation models)

- Real-Time Generative AI Inference

- Scientific Simulations & HPC

- Enterprise Analytics

- Synthetic Media and Digital Content Generation

GB200 NVL72 Rack-Scale Configuration

Why Choose Silicon Mechanics for NVL72?

- Workload-first system planning: We start with your specific AI or HPC workload and infrastructure profile, then recommend a configuration that meets your performance targets without excess capacity or unnecessary complexity.

- Tailored integration with your environment: Our team accounts for your facility’s power, cooling, and rack constraints to ensure your NVL72 system fits cleanly into your existing setup — no guesswork, no surprises.

- Ongoing engineering collaboration: From deployment through scaling and workload evolution, we stay involved to help you get the most out of your system and plan for what’s next.

- Reliable, U.S.-based technical support: You’ll have access to experienced support engineers based in the U.S., available throughout the system lifecycle to troubleshoot issues, advise on upgrades, and help maintain peak performance.